Insert Data from SQL Server to Amazon Redshift Database Table using Copy Command

In this Amazon Redshift tutorial I want to show how SQL developers can load data from a SQL Server database table into an Amazon Redshift database using a CSV file and the Redshift SQL COPY command. SQL programmers will see how to export SQL Server data into a comma-separated CSV file, and then how this file, once uploaded to an S3 bucket, can be imported into a Redshift database table by executing the SQL COPY command.

For the sake of simplicity our first example will be based on a simple SQL Server database table with one column.

Please note that the table does not have an IDENTITY column. Inserting IDENTITY column values with the COPY command requires the additional parameter EXPLICIT_IDS.

But for this Redshift SQL COPY command sample, database developers will not require this option.

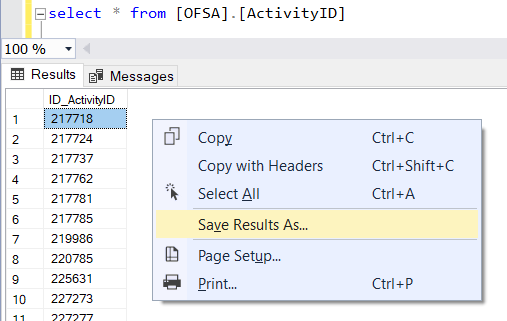

First, I query the table data using a SELECT command so that you can see the data structure too. Although this is not required, let's continue this way.

When the data that you want to transfer into the Amazon Redshift table is listed as the output of the SELECT query in SQL Server Management Studio, right-click the results and choose the "Save Results As..." option from the context menu.

Since my data includes only numeric values, I did not choose a specific encoding during CSV file creation and saved the file directly as a CSV (Comma delimited) (*.csv) file.

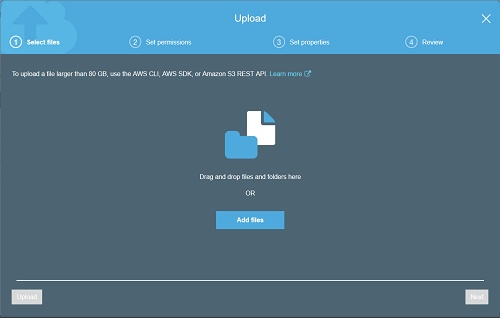

After the data file in CSV format is created, upload it to an Amazon S3 bucket. You can use the AWS Management Console for the file upload.

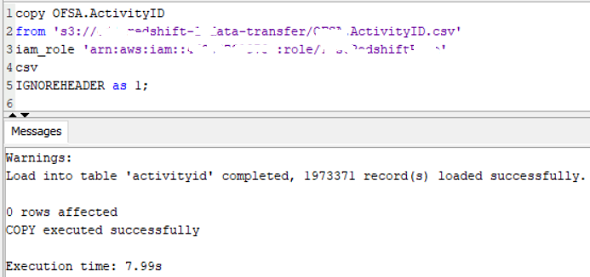

Now Amazon Redshift SQL developers can launch their favorite SQL editor and execute the following COPY command on the target Redshift database:

    copy KODYAZ.ActivityID
    from 's3://kodyaz-redshift-data-transfer/ActivityID.csv'
    iam_role 'arn:aws:iam::26194621972:role/RedshiftS3ReadRole'
    csv ignoreheader as 1;

Since the file format is CSV, I used the csv file format option in the SQL COPY command.

Additionally, since the exported data is saved with a header line that contains the column names, I used the ignoreheader option with 1 as the number of lines to skip.
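To see locally which rows remain once the header line is skipped, the exported file can be previewed before it is uploaded. The following is only a minimal Python sketch, not part of the Redshift load itself. The function name `rows_after_header` and the `ActivityID` header text in the sample are hypothetical and used for illustration only.

```python
import csv
import io


def rows_after_header(csv_text: str, ignore_header: int = 1) -> list[list[str]]:
    """Return the CSV rows that remain after skipping the first lines.

    This only mimics the idea behind "ignoreheader as 1" on a local
    string; it does not talk to Redshift.
    """
    # newline="" leaves line endings untouched so the csv module handles them
    reader = csv.reader(io.StringIO(csv_text, newline=""))
    rows = list(reader)
    return rows[ignore_header:]


# Hypothetical one-column export with a header line
sample = "ActivityID\r\n1\r\n2\r\n3\r\n"
print(rows_after_header(sample))
```

If the first row printed still looks like a column name, the file probably has more than one header line and the ignoreheader value should be adjusted accordingly.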

In the COPY command, the from parameter indicates the address of the file in the S3 bucket.

The file path has the following form: "s3://bucketname/full-filename-path"
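As a small illustration of this path format, the Python sketch below splits an S3 address into its bucket name and file path using only the standard library. The helper name `split_s3_uri` is hypothetical; the sample address is the one used in the COPY command of this tutorial.

```python
from urllib.parse import urlparse


def split_s3_uri(uri: str) -> tuple[str, str]:
    """Split 's3://bucketname/full-filename-path' into (bucket, key)."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"not an S3 address: {uri!r}")
    # The bucket name is the part right after 's3://',
    # the rest (without the leading slash) is the file path in the bucket
    return parsed.netloc, parsed.path.lstrip("/")


bucket, key = split_s3_uri("s3://kodyaz-redshift-data-transfer/ActivityID.csv")
print(bucket)
print(key)
```

A check like this can catch a missing "s3://" prefix or an empty bucket name before the COPY command is sent to Redshift.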

An important parameter for the COPY command is IAM_ROLE, which enables the Amazon Redshift service to reach and read files in the S3 bucket.

On the AWS Management Console, open the Amazon Redshift service dashboard and list the clusters created in that region. When you select the target Redshift cluster and click the Manage IAM Roles button, you can display or assign the IAM role attached to that cluster.

When the COPY command is executed with this role, the S3 service checks whether the incoming request is authorized to read the CSV file in the target bucket.

After the SQL command is committed, the new entries can be displayed by executing a SQL SELECT query on the Redshift database table.

As a second example, I want to show how SQL Server database developers can export table data as a CSV file, and how Amazon Redshift SQL developers can use the COPY command with the "EXPLICIT_IDS" option to import data with identity values from an AWS S3 bucket.

Launch SQL Server Management Studio and connect to the source SQL Server database.

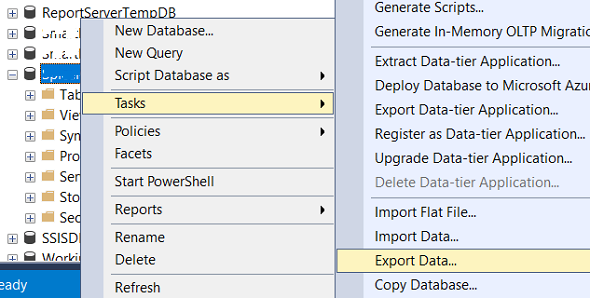

In the Object Explorer window, right-click the database and follow the context menu options Tasks > Export Data...

This will launch the SQL Server Import and Export Wizard.

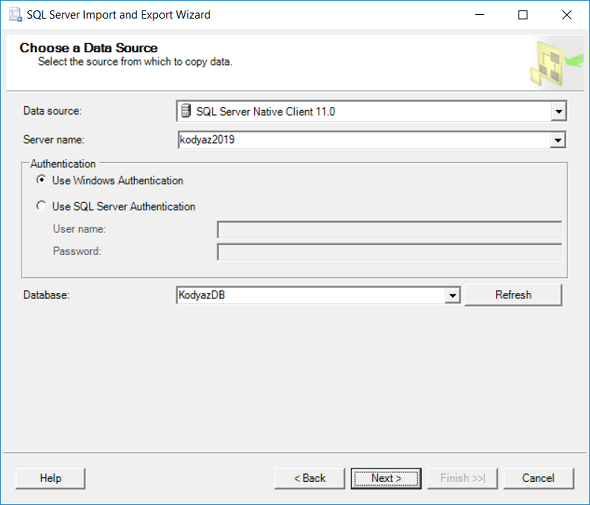

In the "Choose a Data Source" dialog screen, choose "SQL Server Native Client 11.0" as the data source and provide the SQL Server instance name to connect to.

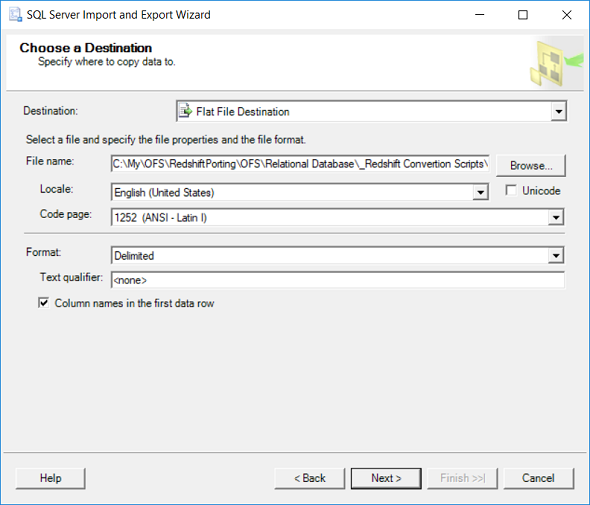

The second step is choosing the destination properties. Choose Flat File Destination to create a .csv file.

Define the file name and the folder to save it in using the "Browse" button.

Choosing the code page can be critical in some cases, for example when the data contains non-ASCII characters, since the Redshift COPY command expects UTF-8 encoded files by default.

In the Format option, it is important to keep "Delimited" for a CSV file.

Check the "Column names in the first data row" option to export the column names with the CSV file.

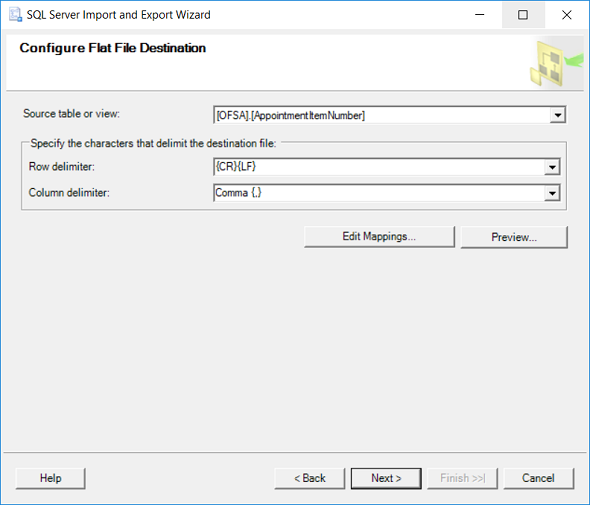

In the next screen, choose the option "Copy data from one or more tables or views" and continue to the following screen.

In "Source table or view", choose the database table or view that you want to export to the .csv file.

The comma character is chosen as the column delimiter.

Continue through the following screens of the Export Data wizard and choose to run immediately.

When the .csv file is created in the target folder, upload the data file to an Amazon S3 bucket using the AWS Management Console.

This CSV file contains identity column data.

So when using the Amazon Redshift COPY command, SQL developers should use the EXPLICIT_IDS option to enable insertion of identity values.

In a Redshift database, SQL programmers cannot explicitly provide a value for an IDENTITY column in SQL INSERT operations; the COPY command is the exception.

    copy KODYAZ.AppointmentItemNumber
    from 's3://kodyaz-redshift-data-transfer/AppointmentItemNumber.csv'
    iam_role 'arn:aws:iam::26194621972:role/RedshiftS3ReadRole'
    csv
    EXPLICIT_IDS
    ignoreheader as 1;

Executing the above SQL COPY command on the target Amazon Redshift database will bulk insert the CSV data into the target database table. After executing a commit command on the Redshift database, developers can query the recently inserted data using SQL queries.
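Since the two COPY commands in this tutorial differ only in the table, the file, and the EXPLICIT_IDS option, the statement text can also be generated. The Python sketch below is one possible way to do this and only builds the SQL string; it does not connect to Redshift. The function name `build_copy_statement` and its parameters are hypothetical, and the values are interpolated as-is, so it assumes trusted input only.

```python
def build_copy_statement(
    table: str,
    s3_uri: str,
    iam_role: str,
    ignore_header: int = 1,
    explicit_ids: bool = False,
) -> str:
    """Build the text of a Redshift COPY command for a CSV file in S3."""
    # NOTE: values are placed into the SQL text directly (trusted input only)
    lines = [
        f"copy {table}",
        f"from '{s3_uri}'",
        f"iam_role '{iam_role}'",
        "csv",
    ]
    if explicit_ids:
        # Needed when the CSV file carries values for an IDENTITY column
        lines.append("explicit_ids")
    if ignore_header:
        # Skip the header line(s) that hold the column names
        lines.append(f"ignoreheader as {ignore_header}")
    return "\n".join(lines) + ";"


print(
    build_copy_statement(
        "KODYAZ.AppointmentItemNumber",
        "s3://kodyaz-redshift-data-transfer/AppointmentItemNumber.csv",
        "arn:aws:iam::26194621972:role/RedshiftS3ReadRole",
        explicit_ids=True,
    )
)
```

Calling the function without `explicit_ids=True` produces the shape of the first example, which loads a table that has no IDENTITY column.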

For more details and sample cases on the SQL Server Import and Export Wizard and on exporting SQL Server query results, please refer to the given tutorials.

In addition to the tutorials referred to above, please check the official documentation on the SQL COPY command in Amazon Redshift.