Remove Duplicate Rows in Greenplum Database Table using SQL

In this Greenplum tutorial, SQL programmers can find a way to remove duplicate rows in Greenplum database table. Assume that in ETL or ELT process or during data ingestion, you had imported or inserted duplicate rows accidentally into Pivotal Greenplum database table as an SQL developer or database administrator. Using the method shown in this method it is possible to remove duplicate records in Greenplum database tables.

Assume that you have a table named vbkd in your Greenplum database schema with some rows inserted multiple times.

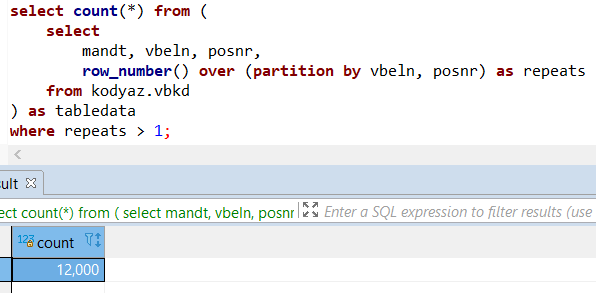

By executing following SQL Select statement, database developers can find the number of duplicated rows. Here it is important to add the fields that makes a row unique in your expected database table. If everything was perfect and uniqueness of table rows was provided then vbeln and posnr column combination should be unique. So I identify duplicate records by counting rows in partition to these fields.

select count(*) from (

select

mandt, vbeln, posnr,

row_number() over (partition by vbeln, posnr) as repeats

from kodyaz.vbkd

) as tabledata

where repeats > 1;

SQL Row_Number() function with Partition By clause enables database developers to identify repeating rows easily.

It is important to put columns that define uniqueness of a row in the Row_Number function Partition By clause.

I have 12 thousands of data rows duplicated in this sample database table.

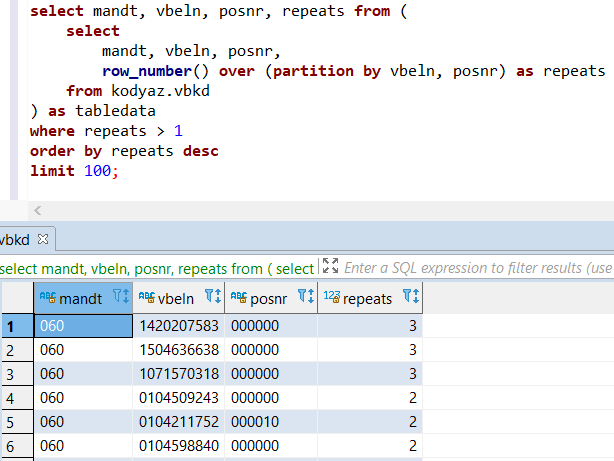

Following SQL query provides the list of duplicate rows and the count of same rows in the sample table or the repeat counts of that row

select mandt, vbeln, posnr, repeats from (

select

mandt, vbeln, posnr,

row_number() over (partition by vbeln, posnr) as repeats

from kodyaz.vbkd

) as tabledata

where repeats > 1

order by repeats desc

limit 100;

SQL developers can see that a few rows have 3 times inserted into source table and rest of the duplications are inserted twice

First step to get rid of duplicate rows is creating a staging table which will store unique rows

Following CREATE TABLE syntax can be used to create the same table structure for a new database table name.

CREATE TABLE kodyaz.vbkd_stage (LIKE kodyaz.vbkd);

It is time to insert non-repeated rows or unique rows by filtering the "repeats" column for field value equals to 1

This time, I have listed all the field names in the database table.

By listing all column names and excluding repeats calculated field, the field numbers and fields match between source table and the staging table.

"where repeats = 1" enables SQL developer to insert only once for each row in source table into staging table eliminating repeated or duplicate rows.

insert into kodyaz.vbkd_stage

select

mandt,

vbeln,

posnr,

konda,

kdgrp,

...

mndvg

from (

select

*,

row_number() over (partition by vbeln, posnr) as repeats

from kodyaz.vbkd

) as tabledata

where repeats = 1;

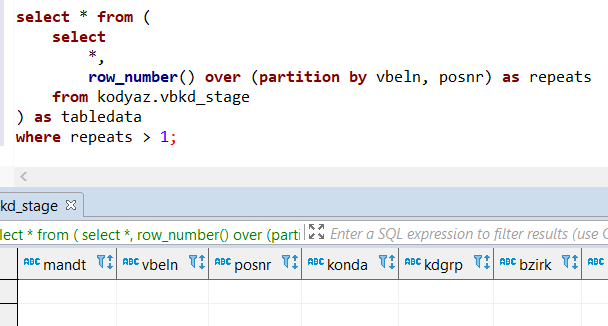

If all work is correct, checking the duplicate records on staging table will return empty resultset.

select * from (

select

*,

row_number() over (partition by vbeln, posnr) as repeats

from kodyaz.vbkd_stage

) as tabledata

where repeats > 1;

Ok, we are fine up to this step.

Since we have a healthy copy of the source table, we can truncate it as follows:

truncate table kodyaz.vbkd;

And we can copy staging table content into source table as follows

insert into kodyaz.vbkd select * from kodyaz.vbkd_stage;

Be sure that all data is correct now on source table, duplicate rows are cleaned and deleted successfully, etc.

Then Greenplum database SQL developer can drop staging table to keep the data platform clean.

drop table kodyaz.vbkd_stage;

I hope this method helps Greenplum database developers to unify and remove duplicate rows in a Greenplum database table using SQL. This method is quite fast and applicable for database table where data sizes are not too big compared to your Pivotal Greenplum cluster. Of course, if there are performance problems, storage limitations, etc then this method for removing duplicate rows can be difficult to handle.