Connect Amazon S3 from Data Virtuality Studio using S3 Connector

Data Virtuality is one of the major data virtualization tools in the market. In this Data Virtuality tutorial, I want to show how SQL developers can query csv files stored in Amazon S3 buckets. First of all, as a logical data warehouse platform, Data Virtuality has a big number of data connectors including Amazon S3 connector which enables data providers to extend their data sources to Amazon S3 object repository. Using CSV Query Builder, without writing any SQL code it is possible for developers to discover the data and its metadata properties, preview and build SQL codes automatically that can be used within a SQL view. The SQL views can be used to build a logical data model within the data virtualization abstraction layer for the data consumers.

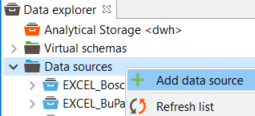

Let's start. First of all logon to target Data Virtuality instance with user who has permission to create a new data source on the data virtualization tool.

When you have logged on to the target server within Data Virtuality Studio (IDE for Data Virtuality), on Data Explorer window, right click on "Data Sources" node

Choose the "Add data source" context menu option.

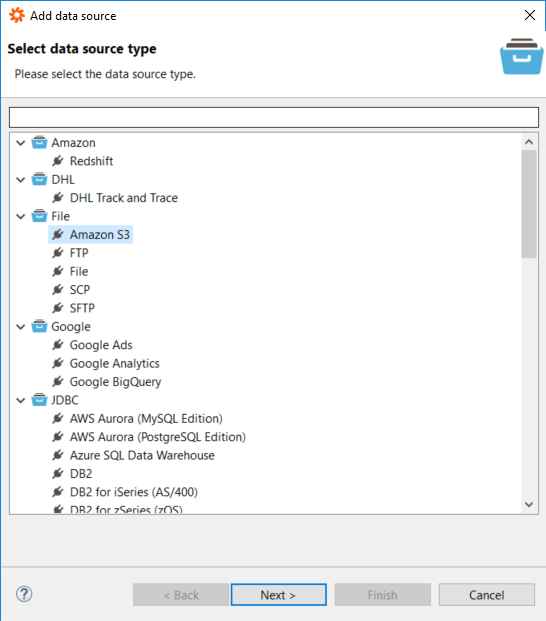

On the screen where you define the target data source type or where you select the connector type, select Amazon S3 as your new data source type

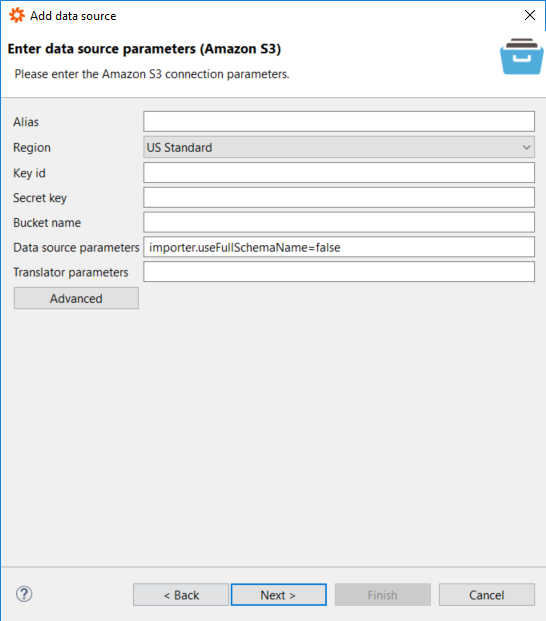

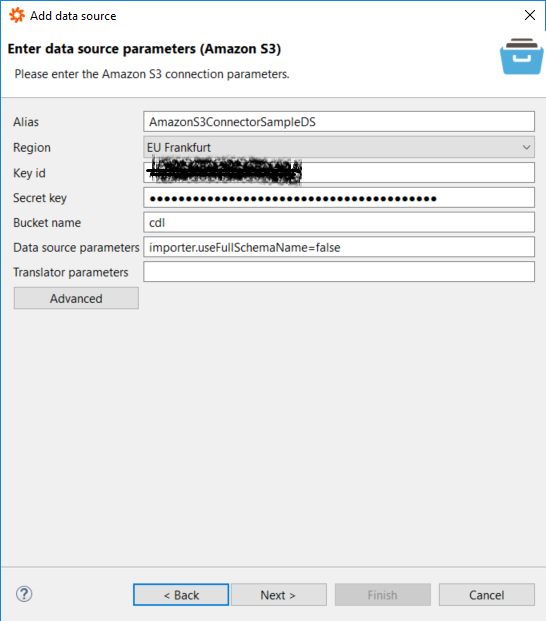

When you continue to the next screen of the wizard named "Add data source" screen, type missing data for the below entries for a successfull Amazon S3 connection.

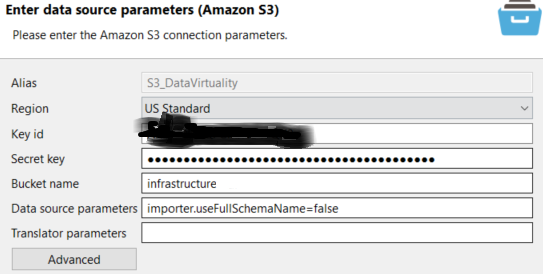

Following sample Amazon S3 connections within Data Virtuality are showing that you can create different data sources using the root S3 bucket names.

After the Amazon S3 bucket is defined as a data source in Data Virtuality Studio, you can easily create virtual schema views for different sub-folders or for different files in that AWS S3 bucket.

The "Alias" is up to the developer.

The SQL developer or Data Virtuality admin can give a name to he new Amazon S3 bucket data source according to the naming convention followed.

It might be important to follow a previously defined naming convention especially to distinguish the data source type, data source and main usage or data coverage of the source.

Region should be chosed according to the region where the AWS S3 bucket is created.

Although the use of Amazon S3 buckets are global and is not limited with a single region, the developer should select the region associated with the S3 bucket from the combobox.

Key id and Secret key information will be used for authentication and authorization during accessing from Data Virtuality SQL queries to Amazon S3 bucket data files.

You can create an IAM user and key id - secret key pair which has access to required Amazon S3 buckets to use in the Data Virtuality Amazon S3 connector connection.

Since these information will provide access to S3 data files, it is important to keep them secret and not to publish on a common platform.

In Bucket name provide the AWS S3 bucket where you want to create SQL views on top of its data files.

The Data Virtuality Amazon S3 connector will provide SQL developers to execute SQL queries to filter and read data stored in provided S3 bucket.

In following steps, you will see how you can create different views within that single S3 bucket for different file types.

After the connection parameters are provided click Next button to continue for S3 connector definition.

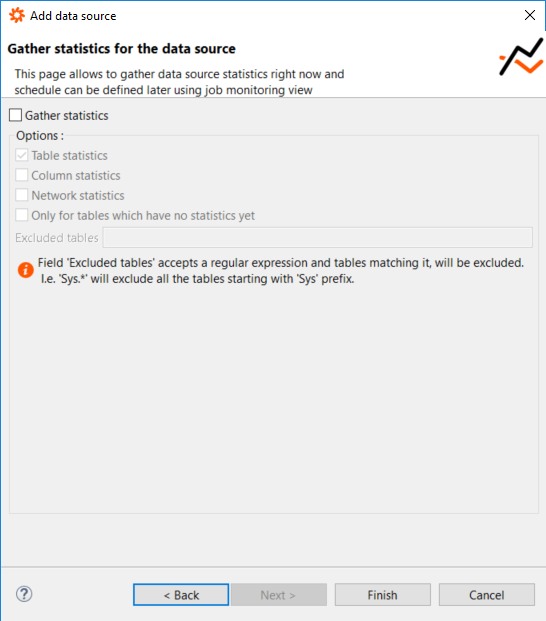

If you mark the checkbox "Gather statistics" you can then provide detailed statistics collection option like table statistics, column statistics, network statistics, etc for the objects on that data source.

Although collecting statistics is a workload to Data Virtuality instance, it may certainly provide performance gain in your SQL queries.

After you made your decision about collecting statistics, click on Finish button.

If you will see the "Data source added." message, then you have successfully created the Amazon S3 data source connection to selected S3 bucket using the S3 connector.

Click OK to end the process of creating a new data source to Amazon S3 bucket within Data Virtuality Studio.

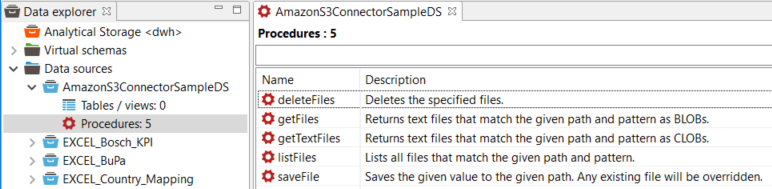

Under Data Sources node, you will see new Amazon S3 data source listed as follows with a number of procedures created by default.

Actually there are 5 procedures.

deleteFiles: Deletes the specified files.

getFiles: Returns text files that match the given path and pattern as BLOBs.

getTextFiles: Returns text files that match the given path and pattern as CLOBs.

listFiles: Lists all files that match the given path and pattern.

saveFile: Saves the given value to the given path. Any existing file will be overridden.

Using these procedures SQL developers can list the files and folders in the Amazon S3 bucket. It is possible to get file details and contents of each file, too.

Before we continue with next steps required for querying S3 file contents, let me share you the sample csv files I used in this tutorial.

You can download the cities.csv file and upload it to a folder named SampleData under target S3 bucket

An other data file which you can download for test is schools.csv. Please upload the downloaded file to the Amazon S3 bucket as a second sample data file.

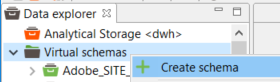

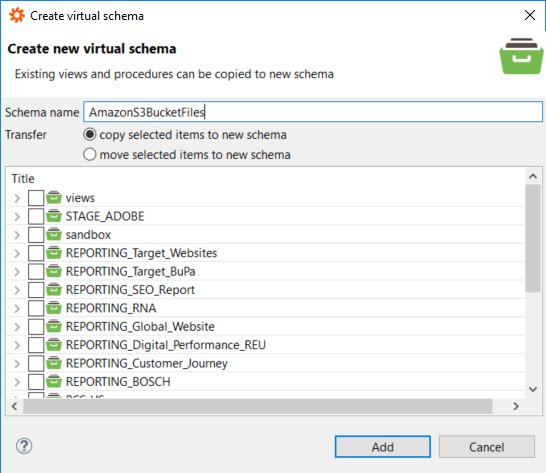

Now you can either create a new virtual schema or use one of the schemas under the "Virtual schemas" node within Data Explorer window

Within the data virtualization layer, virtual schemas are building blocks of your data model with the creation of views within these virtual schemas.

Now SQL developers who will build the data model for operational or BI reporting can launch a new SQL editor using the "Open SQL editor" icon to start creating new SQL queries and views.

CSV Query Builder

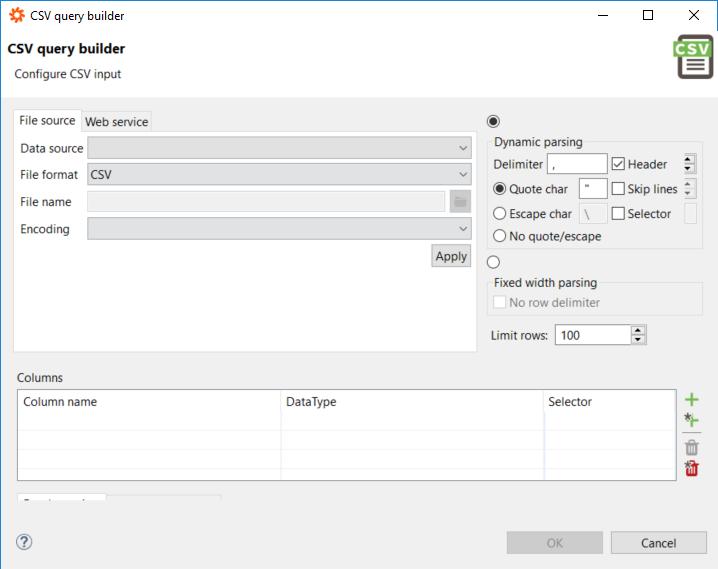

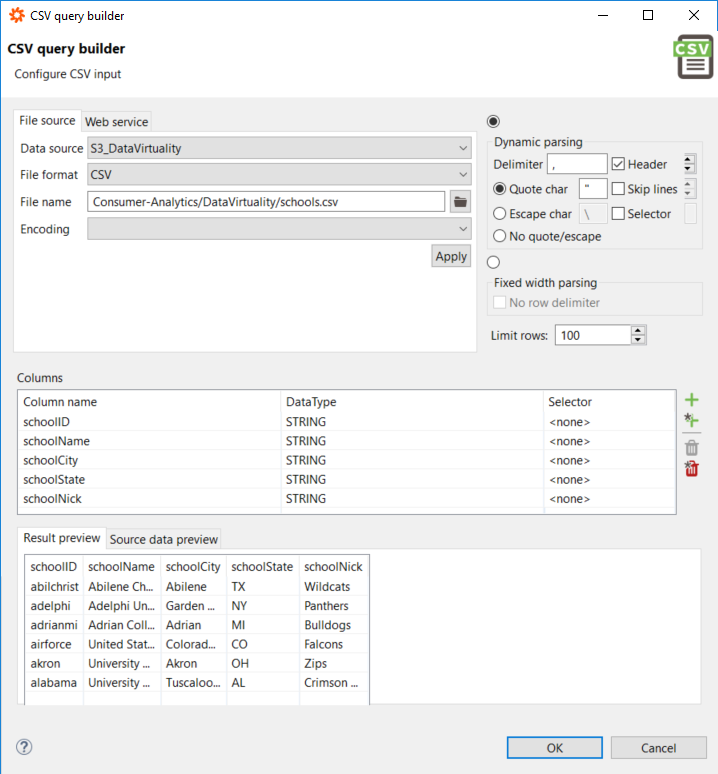

Since we keep csv files within these sample AWS S3 buckets, we can easily discover the metadata of the files using CSV Query Builder and preview the file contents parsing by given parameters.

Open CSV Query Builder on a new SQL editor screen.

![]()

SQL developers can query comma separated value data within CSV files using the CSV Query Builder build-in tool within Data Virtuality Studio

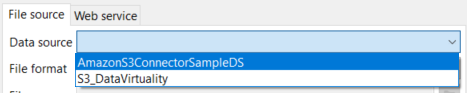

Using the combobox, you can choose the target data source which is created in that Data Virtuality server using the Amazon S3 connector.

Keep the file format as CSV with its default value.

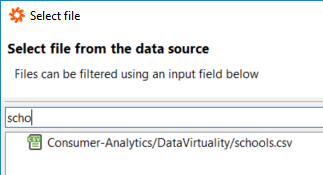

Click on browse icon next to "File name" textbox to choose the file to query

The filter screen first displays all files within the bucket using the S3 connector procedures listFiles, etc. It is possible to filter files by using partial names of the target file.

call "S3_DataVirtuality.listFiles"();;

Click on the file and press Select button to continue

If you click Apply button, the selected CSV file will be parsed for its metadata (columns and data types of each column) according to the options defined on the "CSV Query Builder" screen. Additionally top 100 rows as defined in "Limit rows" parameter will be displayed as a preview of the parsed text file.

If you click OK button, it will prepare a SQL Select statement similar to following

SELECT

"csv_table"."schoolID",

"csv_table"."schoolName",

"csv_table"."schoolCity",

"csv_table"."schoolState",

"csv_table"."schoolNick"

FROM (

call "S3_DataVirtuality".getFiles('Consumer-Analytics/DataVirtuality/schools.csv')

) f,

TEXTTABLE(

to_chars(f.file,'UTF-8')

COLUMNS

"schoolID" STRING ,

"schoolName" STRING ,

"schoolCity" STRING ,

"schoolState" STRING ,

"schoolNick" STRING

DELIMITER ','

QUOTE '"'

HEADER 1

) "csv_table";;

Developers can execute above SQL SELECT statement to display all data.

Additionally the SQL script can be converted into a SQL View as follows within a Virtual Schema.

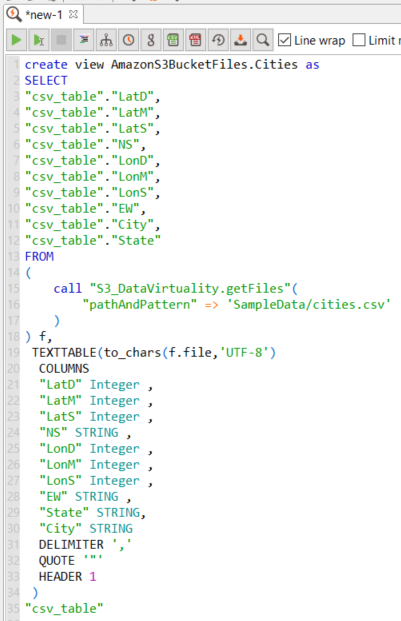

Following script is for the Cities.csv file

A SQL developer or a logical data warehouse developer can build a logical data model by creating new SQL Views based on previously created SQL views

A carefully desinged data model can be published for consumers' use in order to build reports required by them

create view AmazonS3BucketFiles.Cities as

SELECT

"csv_table"."LatD",

"csv_table"."LatM",

"csv_table"."LatS",

"csv_table"."NS",

"csv_table"."LonD",

"csv_table"."LonM",

"csv_table"."LonS",

"csv_table"."EW",

"csv_table"."City",

"csv_table"."State"

FROM (

call "S3_DataVirtuality.getFiles"(

"pathAndPattern" => 'SampleData/cities.csv'

)

) f,

TEXTTABLE (

to_chars(f.file,'UTF-8')

COLUMNS

"LatD" Integer ,

"LatM" Integer ,

"LatS" Integer ,

"NS" STRING ,

"LonD" Integer ,

"LonM" Integer ,

"LonS" Integer ,

"EW" STRING ,

"State" STRING,

"City" STRING

DELIMITER ','

QUOTE '"'

HEADER 1

) "csv_table" ;;

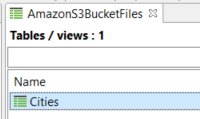

After you execute the above SQL Create View statement successfully, you can close the SQL editor

If you refresh the tables/views list under the target virtual schema, you will see the new view object is now listed for other developers to use

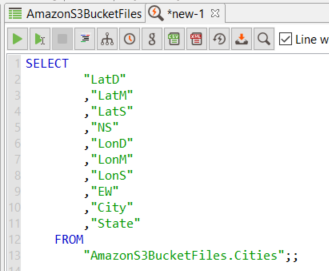

If you double click on any object in a list like tables / views list or procedures list, the execution SQL script is displayed on the current SQL editor screen if at least one is open. If there is not any open SQL Editor screen, the SQL command to run the procedure or display data from table or view will be displayed in a new SQL Editor screen.

By a double click on Cities view will launch a new SQL editor screen with the SQL SELECT statement displayed as follows

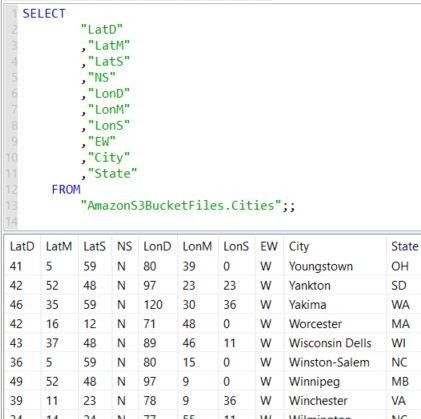

Run the SELECT SQL statement displayed on SQL editor screen automatically in order to display the virtual schema view data

For more on CSV Query Builder please refer to official documentation from Data Virtuality