Frequently Used Linux Commands during AWS Operations

This Linux commands tutorial lists commands that are frequently used during AWS operations, such as copying a file from an Amazon S3 bucket to a directory on an EC2 instance, creating a new directory on Linux, or renaming a file. These Linux commands and their sample uses are meant to make the daily work of AWS DevOps engineers easier and help them complete their tasks faster. For the full syntax and the other parameters of any command shared in this guide, consult its manual page or search the web to gain a deeper understanding of these Unix tools.

Copy Files from AWS S3 Bucket into Current Directory

To copy files that are stored in an AWS S3 bucket, Linux users can execute the following command, which copies those files into the current directory.

aws s3 sync s3://aws-datavirtuality/driver .

In "s3://aws-datavirtuality/driver", "aws-datavirtuality" is the Amazon S3 bucket name and "driver" is the folder (key prefix) inside it. The files in that folder are copied into the current folder, which is identified by "." (dot) in the command.

If you type a folder name instead of ".", the files are copied into the subfolder with that name. If no such subfolder exists, it is created and the files are copied into this new target folder.


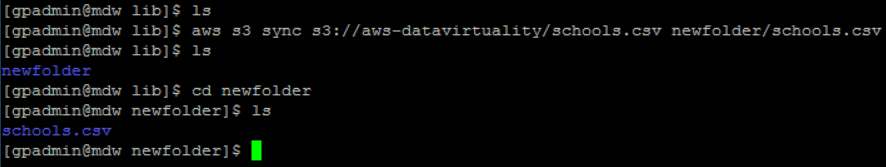
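Before copying a large folder, it can help to preview what "aws s3 sync" would transfer. The following is a minimal sketch, assuming the AWS CLI is installed and credentials are configured. The helper names preview_sync and sync_jars and the "*.jar" filter are illustrative assumptions and not part of the original example.

```shell
#!/bin/sh
# Bucket and prefix taken from the example above; replace with your own.
SRC="s3://aws-datavirtuality/driver"

# preview_sync is a hypothetical helper name.
# --dryrun lists the planned operations without copying anything.
preview_sync() {
    aws s3 sync "$SRC" "${1:-.}" --dryrun
}

# sync_jars is also hypothetical: copy only *.jar files by excluding
# everything first and then re-including the wanted pattern.
sync_jars() {
    aws s3 sync "$SRC" "${1:-.}" --exclude "*" --include "*.jar"
}
```

Calling "preview_sync drivers" shows what would land in a "drivers" subfolder. With no argument, both helpers target the current directory.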

Copy File from AWS S3 Bucket Folder into a new Subfolder

On a Linux EC2 instance, if you want to copy a single file from an Amazon S3 bucket into a new subfolder, the following AWS S3 command can be used. Note that "aws s3 sync" works on folders, so a single file is copied with "aws s3 cp".

aws s3 cp s3://aws-datavirtuality/schools.csv newfolder/schools.csv

This sample command copies the schools.csv file from the Amazon S3 bucket s3://aws-datavirtuality/

A new directory named "newfolder" is created under the current path if it does not already exist.

As the last step, the original schools.csv file is written into the new directory with the name "schools.csv" (in this case the name is not altered).

Here is how the above commands are executed

Copy Single File from AWS S3 Bucket into Current Folder

To copy a single file from an AWS S3 bucket folder into the current folder, the following sample "aws s3 cp" command can be used.

aws s3 cp s3://mybucket/filefolder/myfile.jar myfile.jar

You can also give the local copy a different name by changing the last argument of the command.

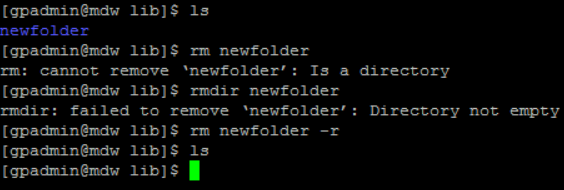
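The two download variants above (into a subfolder, and under a different name) can be combined in one small helper. This is only a sketch under assumptions: fetch_into is a hypothetical function name, and the AWS CLI is assumed to be installed and configured.

```shell
#!/bin/sh
# fetch_into is a hypothetical helper.
# Arguments: S3 URI, target folder, optional new file name.
fetch_into() {
    uri="$1"
    dir="$2"
    name="${3:-$(basename "$uri")}"   # default: keep the original file name
    mkdir -p "$dir" || return 1        # create the folder if it is missing
    aws s3 cp "$uri" "$dir/$name"
}
```

For example, "fetch_into s3://mybucket/filefolder/myfile.jar newfolder" keeps the name myfile.jar, while adding a third argument such as "renamed.jar" stores the file under that name instead.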

How to Delete a Directory or Folder on an EC2 Instance

To delete a file, the "rm" command can be used.

The file name is typed after the "rm" command.

rm newfolder

Of course, if "newfolder" is not a file but a subfolder, the following error occurs:

rm: cannot remove ‘newfolder’: Is a directory

In this case we can use another command.

To delete an empty subfolder, the "rmdir" command is used.

The name of the subdirectory is typed after the "rmdir" command.

rmdir newfolder

If the subfolder is not empty, another error is reported.

rmdir: failed to remove ‘newfolder’: Directory not empty

We can delete the subfolder together with its contents by passing the "-r" (recursive) option to the "rm" command as follows. Double-check the path first, because the deletion cannot be undone.

rm -r newfolder

This deletes the subdirectory "newfolder" and, recursively, all of its contents.
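The sequence above can be tried safely in a throwaway directory. The sketch below is illustrative only; the folder names emptyfolder and sub and the file a.txt are made up for the demonstration.

```shell
#!/bin/sh
# Work inside a throwaway directory so nothing real is deleted.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

mkdir emptyfolder            # an empty directory
mkdir -p newfolder/sub       # a directory with nested content
touch newfolder/sub/a.txt

rmdir emptyfolder            # succeeds: the directory is empty

# rmdir refuses a non-empty directory and returns a non-zero status.
if ! rmdir newfolder 2>/dev/null; then
    echo "newfolder is not empty, removing recursively"
    rm -r newfolder          # deletes the directory and everything in it
fi

ls -A "$workdir"             # prints nothing: both directories are gone
```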

ImportError: No module named awscli.clidriver

While I was trying to execute awscli commands such as "aws s3 sync", I got the exception message: ImportError: No module named awscli.clidriver

This error usually means that the awscli Python package is not installed on the EC2 instance, or is not visible to the Python interpreter that the "aws" launcher uses.

To install the awscli package, the following "pip install" command can be executed on that Linux EC2 instance.

pip install awscli

pip installs the awscli package, which enables AWS DevOps engineers to execute AWS commands and access AWS resources from the command line.

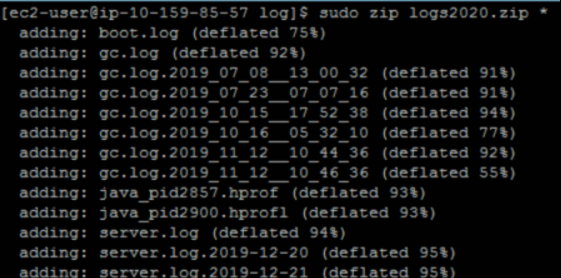
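A quick check can tell the two failure cases apart: the "aws" executable missing entirely, or present but failing to start (as with the ImportError above). The following is a minimal sketch; check_aws_cli is a hypothetical helper name, and the messages are illustrative.

```shell
#!/bin/sh
# check_aws_cli is a hypothetical helper name.
check_aws_cli() {
    # command -v is the POSIX way to test whether a command is available.
    if ! command -v aws >/dev/null 2>&1; then
        echo "aws executable not found on PATH"
        return 1
    fi
    # The executable can exist while its Python module is missing,
    # which is the ImportError case described above.
    if ! aws --version >/dev/null 2>&1; then
        echo "aws is on PATH but fails to start; try reinstalling awscli"
        return 1
    fi
    echo "aws CLI is usable"
}
```

Running "check_aws_cli" before a script's first S3 command gives a clearer message than a Python traceback.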

Compress Contents of a File Folder

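Change into the directory whose contents you want to compress.

Assume that I want to compress server logs using the "zip" command.

The server log files are stored in a folder named "log".

Executing the command below from inside that folder compresses the files in the current folder into a new archive named logs2020.zip. Note that "*" does not match hidden files, and the contents of subfolders are only included when the "-r" option is added. "sudo" is needed only when the current user cannot read the log files or write to the folder.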

sudo zip logs2020.zip *

The following screenshots show how I recently used the zip command on an EC2 Linux server.

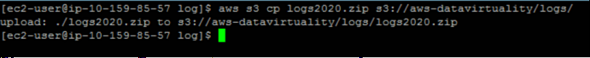

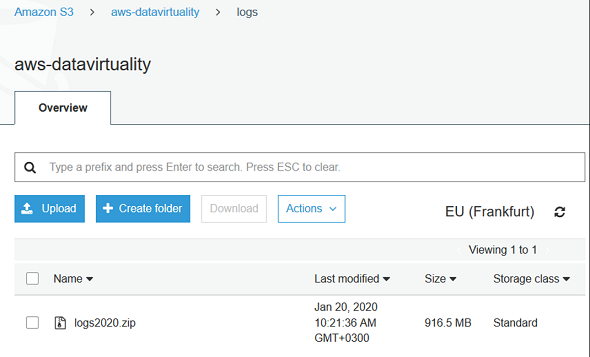
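To experiment with the zip step without touching real logs, a throwaway folder can be used. This is a sketch under assumptions: the sample file names are invented, and no sudo is used because the temporary folder belongs to the current user.

```shell
#!/bin/sh
# Hypothetical layout: a throwaway "log" directory with two sample files.
workdir=$(mktemp -d)
mkdir "$workdir/log"
echo "sample entry" > "$workdir/log/server.log"
echo "sample entry" > "$workdir/log/access.log"

cd "$workdir/log" || exit 1

if command -v zip >/dev/null 2>&1; then
    # -q suppresses per-file output; -r also descends into subfolders.
    # Using "." instead of "*" picks up hidden files as well.
    # -x keeps the archive from being added to itself.
    zip -q -r logs2020.zip . -x logs2020.zip
    ls -l logs2020.zip
else
    echo "zip is not installed on this machine"
fi
```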

Copy Single File to AWS S3 Bucket Folder

To copy a single file that is stored in a folder on an EC2 instance to an AWS S3 bucket folder, the following command can help.

First I navigate into the folder where the file exists, then I execute the "aws s3 cp" copy command.

aws s3 cp logs2020.zip s3://aws-datavirtuality/logs/

The copy command above uploads the file "logs2020.zip" from the current folder into the "logs" folder of the S3 bucket "aws-datavirtuality", keeping the same file name.

The file can now be seen in the S3 bucket.

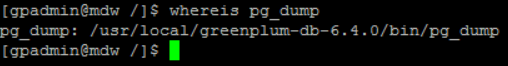

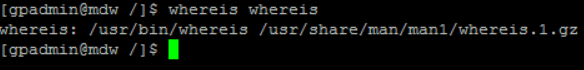
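The upload can also be confirmed from the command line with "aws s3 ls" instead of the console. The sketch below assumes a configured AWS CLI; upload_and_list is a hypothetical helper name.

```shell
#!/bin/sh
# Bucket and prefix from the example above; replace with your own.
BUCKET_PREFIX="s3://aws-datavirtuality/logs/"

# upload_and_list is a hypothetical helper name.
upload_and_list() {
    file="$1"
    if [ ! -f "$file" ]; then
        echo "no such file: $file" >&2
        return 1
    fi
    # Copy the file, then list the prefix only if the copy succeeded.
    aws s3 cp "$file" "$BUCKET_PREFIX" && aws s3 ls "$BUCKET_PREFIX"
}
```

For the archive created earlier, the call would be "upload_and_list logs2020.zip".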

Search for specific file on EC2 Server using Linux whereis Command

When you are developing and configuring your application on your EC2 instance, you may sometimes need to find a program and its full path on the Linux server. The "whereis" command enables Linux administrators and EC2 users to locate the binary, source, and manual page files of a command. It does not search for arbitrary files.

Here are two basic examples showing how to use the whereis command.

The first example searches for the whereis utility itself and displays its location.

whereis whereis

The other sample searches for the standard PostgreSQL pg_dump utility, which is used for database backups and for scripting database objects (DDL) and/or data. pg_dump is also supported by Pivotal Greenplum database.

whereis pg_dump
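Inside scripts, "command -v" is a common way to get the path the shell would actually run, and "find" covers arbitrary files that whereis does not look for. The following sketch uses two hypothetical helper names, locate_tool and find_by_name.

```shell
#!/bin/sh
# locate_tool is a hypothetical helper name.
# It prints where the shell would find a command, or a short notice.
locate_tool() {
    if path=$(command -v "$1" 2>/dev/null); then
        echo "$1 -> $path"
    else
        echo "$1 not found on PATH"
        return 1
    fi
}

# find_by_name (hypothetical) searches a directory tree for regular
# files whose name matches a pattern.
find_by_name() {
    find "$1" -type f -name "$2" 2>/dev/null
}

locate_tool ls
locate_tool pg_dump || true   # pg_dump may not be installed everywhere
```

For example, "find_by_name /var/log '*.log'" lists the log files under /var/log that the current user is allowed to read.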