AWS Glue Job with SAP HANA Database Connection

Using AWS Glue ETL developers building integration applications on cloud can connect SAP HANA databases for data operations like reading, writing and updating data using JDBC connection.

In this AWS Glue tutorial, I want to demonstrate how ETL (Extract, Transform and Load) developers can connect SAP HANA database using custom JDBC driver for HANA database connections.

On the web there are only a few articles about AWS Glue and SAP HANA database connection, so I wanted to publish this article to describe sections which are unclear on others and to be an alternative sample on the topic.

Before we start, I want to note something that confused me a lot. Maybe you will be understand what is confusing and what I mean at the end of this AWS Glue tutorial.

In fact, when I create a connection I assume that I define the drivers, JDBC driver jar files and connection properties like host, port, user credentials, etc at connection step.

On the other hand, for custom connection drivers like in our case, the SAP HANA database driver file is referenced within the Glue script. So database connection drivers are in the Glue job step not in connection step.

If you accept this uncommon steps in connection definition order, then you can apply this scenario where we create a connection for a SAP HANA database, to other data platforms requiring custom JDBC driver files.

Amazon S3 Configuration for AWS Glue Job

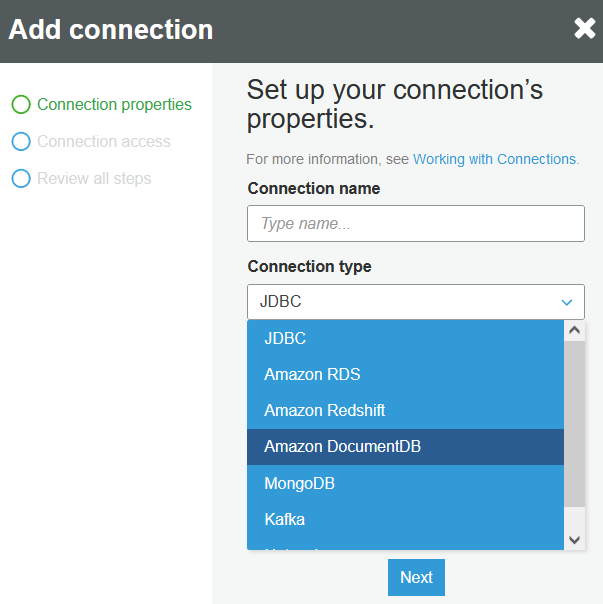

AWS Glue supports build-in connections to various data platforms like Amazon RDS, Amazon Redhift, Amazon DocumentDB, MongoDB, Kafka, etc. In addition to above, using JDBC connection type, ETL developers can point to a JDBC connection driver file (jar file) stored on an Amazon S3 bucket and use it for connecting to any data platform.

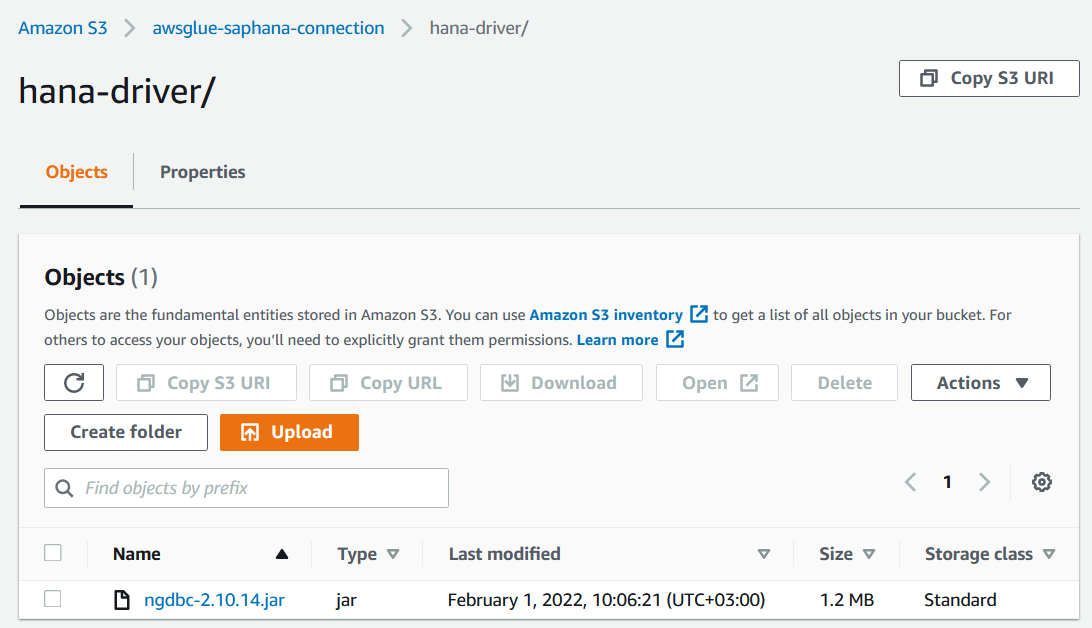

In this tutorial, we will upload HANA database driver file (ngdbc-2.10.14.jar) to an S3 bucket and use from AWS Glue job.

Create a new Amazon S3 bucket with default settings. I created one S3 bucket named "awsglue-saphana-connection"

Create a sub-folder named "output" where the Glue job will put the data in CSV format

Create a second sub-folder named "hana-driver" to store the JDBC driver for SAP HANA database.

Upload the .jar file of the ngdbc.jar or in my tutorial the driver file ngdbc-2.10.14.jar for SAP HANA 2.0 as seen below

IAM Role for AWS Glue Job

As the second step, we should create a new IAM role with permissions to read Amazon S3 bucket and execute Glue jobs.

So launch the IAM service dashboard on AWS Management Console.

From IAM roles, create a new IAM role.

Leave default selected "AWS service" for the trusted entity type.

Select "Glue" service from the list of AWS services for your use case displayed on the screen.

Continue to next screen for permissions.

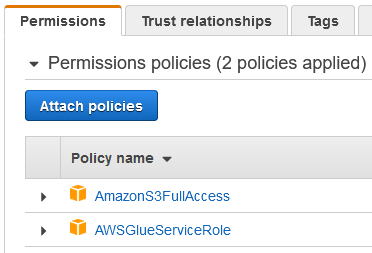

Attach following permissions: AmazonS3FullAccess, AWSGlueServiceRole

Finally named the name IAM role, for example IAMRoleForGlue2HANAConnection

Please note that we have attached build-in policies which are granting wide permissions to new IAM role.

With granular permissions like limiting access to only recently created S3 bucket is an example of applying limits to IAM policies and roles in order to apply grant least privilege security principle.

SAP HANA Database Connection Details

This sample Glue job connects to a HANA database and reads data from a HANA table/view.

In order to connect to target SAP HANA database we need to know some connection details like host name or IP address, port number, database name, credentials including user name and password, also the table or view name to read data from.

The JDBC url format details can be read from the SAP HANA Client Interface Programming Reference via Connect to SAP HANA via JDBC.

Basically the JDBC url should be formed as follows for HANA databases:

jdbc:sap://hanadatabasehost:port/?databaseName=dbname

Since we will provide the credentials via some other parameters we don't need to pass these credential details via JDBC url

The HANA database host name or IP address details and the port number, you should be getting from your HANA database administrators.

Since the host name is not resolvable from AWS account in my case I will be using the IP address of the HANA database server which is on-prem and connected to my AWS account via VPN

Again in my scenario, I will be using the port number 30215.

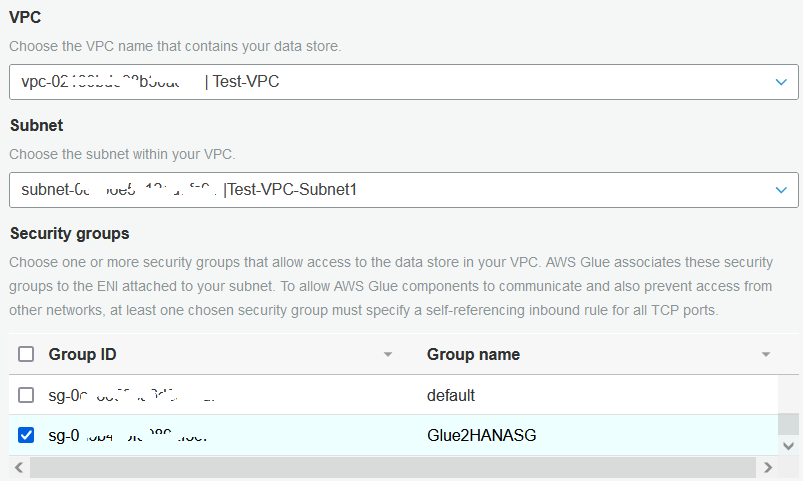

VPC Subnet and Security Group Settings

When the Glue job is executed, there will be a network traffic from the AWS resource where Glue job is running on and the target HANA database.

So choose from your network setting on VPC dashboard where you want your Glue job to be executed.

Since there will be a network connection from those VPC subnets to HANA database, if your HANA database is connected via VPN or Direct Connect be sure that you have selected VPC and subnets where this connection exists.

If you choose a VPN which is not connected to VPN gateway then you won't be able to connect to your on-premise HANA database with your Glue job.

After VPC and subnets are chosen, we need to create a new Security Group

On VPC Dashboard, choose Security Groups from left menu.

Click on "Create security group"

Give a name to your security group, for example Glue2HANASG

Choose the suitable VPC for network connection.

Add Inbound rule for all traffic from anywhere as starting point. In fact, you can limit this to the IP address of your HANA database and to the port where the communication is done.

We can keep the default Outbound rule which allows all outgoing traffic. Of course it is also possible to limit this to specific IP addresses or ranges and limit to some certain ports.

Create Database Connection for AWS Glue

Now with the connection details collected in previous step, we can define a Connection object in AWS Glue console which ww will be using in following steps.

Now launch AWS Glue dashboard on AWS Management Console.

Click on Connections under Databases

Click on "Add connection"" button

Define a "Connection name"

Choose JDBC as connection type

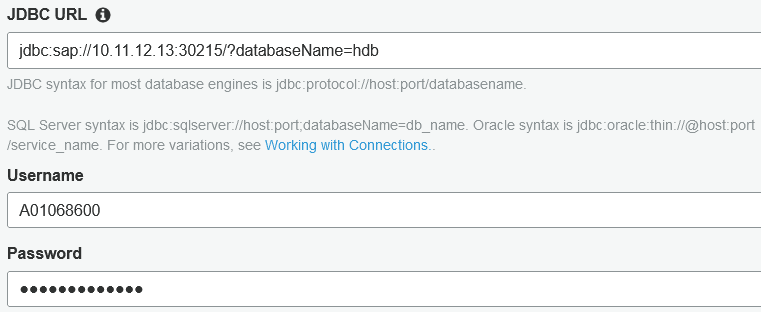

On next screen, type your JDBC url for your database connection. Here is mine with changed IP and port than real database connection details as a sample for you:

jdbc:sap://10.11.12.13:30215/?databaseName=hdb

The next parameters for the Glue Connection is network settings.

This part is important because we will be defining the network settings where the Glue job will be created and executed and the security group settings allowing network traffic to target HANA database connection via port allowance.

We have already defined our VPC, subnet and security group selections in previous steps. We will be using them in this step now.

Review the settings on following step and complete Glue connection definition.

Configure AWS Glue Job Properties

On AWS Glue dashboard, on this step we will create a new Glue job.

Click on Jobs from left menu.

Click on "Add job" button.

On job properties page, type a name like HANAConnectionGlueJob for the Glue job.

Choose the IAM role you have created in previous steps, IAMRoleForGlue2HANAConnection.

Keep default selection Spark for type

Choose the selection option "A new script to be authored by you"

For script file name type Glue job name

For S3 path where the script is going to be stored, use the browser and choose the Amazon S3 bucket s3://awsglue-saphana-connection we have created in previous steps.

For temporary directory, you can select the same S3 location

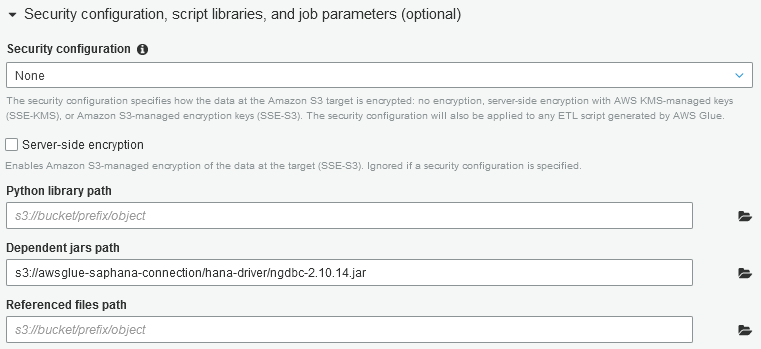

Extend "Security configuration, script libraries, and job parameters (optional)" section.

For "Dependent jars path" select the HANA database JDBC driver file which we have uploaded into Amazon S3 bucket before.

Here is what I entered by using the directory browser: s3://awsglue-saphana-connection/hana-driver/ngdbc-2.10.14.jar

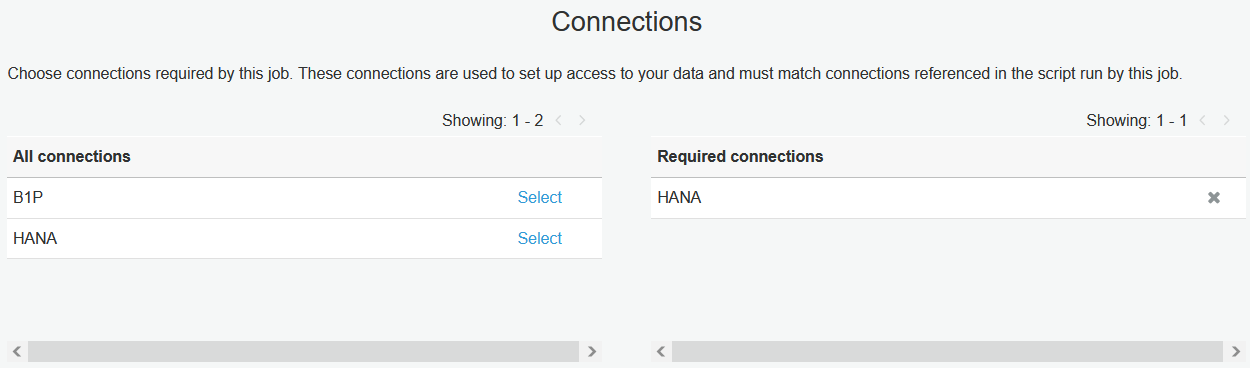

Continue to Next screen where we will select the connection created in previous steps.

From the "All connections" list, click on the "Select" link to move the connection we named as "HANA" from all connections to "Required connections" list.

Click on "Save job and edit script" button.

Copy below code and paste into script editor window

In the following code, developers will see there are a number of column mappings from the target HANA database table or view.

Please note that it is not required to use all the columns in the list, developers can choose required columns and use them only in the mapping list to bring them into the output file from its source on HANA database.

import sys

import boto3

import json

from awsglue.transforms import *

from awsglue.utils import getResolvedOptions

from pyspark.context import SparkContext

from awsglue.context import GlueContext

from awsglue.dynamicframe import DynamicFrame

from awsglue.job import Job

## @params: [JOB_NAME]

args = getResolvedOptions(sys.argv, ['JOB_NAME'])

sc = SparkContext()

glueContext = GlueContext(sc)

spark = glueContext.spark_session

job = Job(glueContext)

job.init(args['JOB_NAME'], args)

db_username = 'A01068462'

db_password = '********'

db_url = 'jdbc:sap://10.11.12.13:30215/?databaseName=hdb'

table_name = '_SYS_BI.BIMC_DESCRIPTIONS'

jdbc_driver_name = 'com.sap.db.jdbc.Driver'

s3_output = 's3://awsglue-saphana-connection/output/'

# Connecting to the source

df = glueContext.read.format("jdbc").option("driver", jdbc_driver_name).option("url", db_url).option("dbtable", table_name).option("user", db_username).option("password", db_password).load()

df.printSchema()

datasource0 = DynamicFrame.fromDF(df, glueContext, "datasource0")

# Defining mapping for the transformation

applymapping2 = ApplyMapping.apply(frame = datasource0, mappings = [("SCHEMA_NAME", "varchar","SCHEMA_NAME", "varchar"),("QUALIFIED_NAME", "varchar","QUALIFIED_NAME", "varchar"),("ID", "varchar","ID", "varchar"),("LANG", "varchar","LANG", "varchar"),("DESCRIPTION", "varchar","DESCRIPTION", "varchar"),("ORIGINAL_SCHEMA", "varchar","ORIGINAL_SCHEMA", "varchar")], transformation_ctx = "applymapping1")

resolvechoice3 = ResolveChoice.apply(frame = applymapping2, choice = "make_struct", transformation_ctx = "resolvechoice3")

dropnullfields3 = DropNullFields.apply(frame = resolvechoice3, transformation_ctx = "dropnullfields3")

# Writing to destination

datasink4 = glueContext.write_dynamic_frame.from_options(frame = dropnullfields3, connection_type = "s3", connection_options = {"path": s3_output}, format = "csv", transformation_ctx = "datasink4")

job.commit()

Above code connects to HANA database and reads table data from BIMC_DESCRIPTIONS from _SYS_BI schema.

Then Glue job writes data into S3 bucket output folder.

Now AWS Glue developers can test the Glue job by running it.

I hope this AWS Glue tutorial is useful to understand the solution structure which can be applied to not only for SAP HANA database connections but to other data platforms which are not listed in build-in supported Glue connections